Copyright © 2025 LexisNexis and/or its Licensors.

By: Romaine Marshall and Jennifer Bauer, Polsinelli PC

This article addresses the broad scope of artificial intelligence (AI) laws in the United States that focus on mitigating risk, and discusses the patchwork of laws, regulations, and industry standards that are forming duties of care and legal obligations. In the absence of a well-regulated industry with established legal frameworks around AI, professionals interested in mitigating AI risks within their businesses will need to consider other signals, such as Federal Trade Commission (FTC) enforcement trends and the National Institute of Standards and Technology's (NIST) AI Risk Management Framework (AI RMF), to identify, evaluate, and mitigate AI risks.

NIST AI Risk Management Framework

The NIST AI RMF provides voluntary guidance for managing AI risks throughout the AI life cycle to help ensure trustworthy AI models. It outlines characteristics such as validity, reliability, safety, and transparency, among others. The framework suggests risk management strategies such as AI risk management policies, AI system inventories, and AI incident response plans. It also highlights the importance of balancing these characteristics based on the AI's use case.

The relevant use case requires a practical interpretation of the broad risks presented. The accompanying NIST CSF 2.0 Implementation Examples provide greater clarity. A high-level overview of the risk management strategies, risks, and controls we typically recommend including in such a program is provided in the visual below.

Federal Trade Commission Enforcement Actions

The FTC has been active in addressing improper AI use and development, as seen in its cases against Rite Aid Corporation and 1Health.io Inc. These cases underscore the importance of proper data handling, transparency, and adherence to privacy policies. The FTC's actions serve as a warning to companies about the consequences of retroactive privacy policy changes and inadequate data security measures.

State Approaches to AI Governance

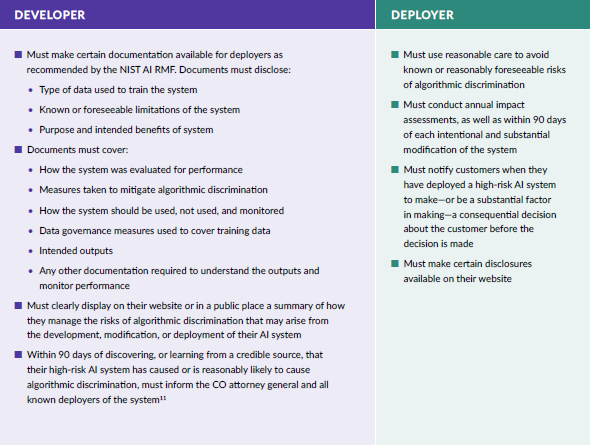

In the absence of federal AI laws, states such as Utah and Colorado have enacted their own legislation. Utah's AI bill focuses on transparency and data privacy, while Colorado's AI Act, influenced by the NIST AI RMF, imposes requirements on developers and deployers of high-risk AI systems. California has also passed AI-related bills, emphasizing disclosure and risk assessments in collaboration with industry leaders.

The Colorado Act imposes standards for deployers and developers, along with a reasonable duty of care to protect consumers from risks:

Holistic AI Risk Management

The full article outlines key takeaways for developing an AI risk management strategy and recommends the following:

- Think about AI risks and laws expansively.

- Use holistic solutions such as those outlined in the NIST AI Risk Management Framework.

- Always involve humans in any AI-enabled processes and decision-making.

- The best solution is often the simplest: Be proactive and transparent in soliciting informed consent.

These strategies aim to reduce business risk and ensure compliance with evolving legal and regulatory frameworks.

The above information is a summary of a more comprehensive article included in Practical Guidance, where customers may view the full article.


Romaine Marshall is a shareholder at Polsinelli PC. He helps organizations navigate legal obligations relating to data innovation, privacy, and security. He has extensive experience as a business litigation and trial lawyer, and as legal counsel in response to hundreds of cybersecurity and data privacy incidents that, in some cases, involved litigation and regulatory investigations. He has been lead counsel in multiple jury and bench trials in Utah state and federal courts, before administrative boards and government agencies nationwide, and has routinely worked alongside law enforcement.

Jennifer Bauer is counsel at Polsinelli PC. She has extensive experience in global privacy program design, evaluation and audit, regulatory compliance and risk reporting, remediation, and data privacy and cybersecurity law. She is a trusted and experienced leader certified by the International Association of Privacy Professionals. Jenn has transformed the privacy programs of five Fortune 300 companies and advised blue-chip companies and large government entities on data privacy and security requirements, regulatory compliance (particularly GDPR / CCPA), and operational improvement opportunities.

Related Content

For more practical guidance on artificial intelligence (AI), see

For an overview of proposed or pending AI-related federal, state, and major local legislation across multiple practice areas, see

For a survey of state and local AI legislation across multiple practice areas, see

For a discussion of legal issues related to the acquisition, development, and exploitation of AI, see

For an analysis of the key considerations in mergers and acquisitions due diligence in the context of AI technologies, see ARTIFICIAL INTELLIGENCE (AI) INVESTMENT: RISKS, DUE DILIGENCE, AND MITIGATION STRATEGIES

For a look at legal issues arising from the increased use of AI and automation in e-commerce, see

For a checklist of key legal considerations for attorneys when advising clients on negotiating contracts involving AI, see

Sources

National Institute of Standards and Technology, The NIST Cybersecurity Framework (CSF) 2.0.

National Institute of Standards and Technology, CSF 2.0-Implementation Examples.

FTC v. Rite Aid Corp., No. 23-cv-5023 (E.D. Pa. Dec. 19, 2023).

FTC v. Rite Aid Corp., No. 23-cv-5023 (E.D. Pa. Feb. 26, 2024).

1Health.io Inc., 2023 FTC LEXIS 77 (Sept. 6, 2023).

See Utah Artificial Intelligence Policy Act, Utah Code Ann. §§ 13-72-101 to -305.

Colo. Rev. Stat. §§ 6-1-1701 to -1707.

Colo. Rev. Stat. § 6-1-1701(6) and (7).